Dataoorts is one of the leading GPU cloud providers for AI and machine learning projects in the USA, offering cutting-edge NVIDIA GPUs like the H100 and A100, ultra-fast provisioning, transparent pricing, and flexible payment options such as card, PayPal, crypto, and UPI (available for India, Nepal, and other UPI-enabled countries). This makes critical cloud GPU computing accessible, scalable, and affordable for startups, developers, and enterprises alike. This blog explains why Dataoorts leads the industry, details its features and pricing, compares top competitors, showcases real-world use cases, discusses advanced cost-saving strategies, and answers key questions that affect your GPU cloud decisions.

Understanding GPU Cloud Computing for AI: Why It Matters

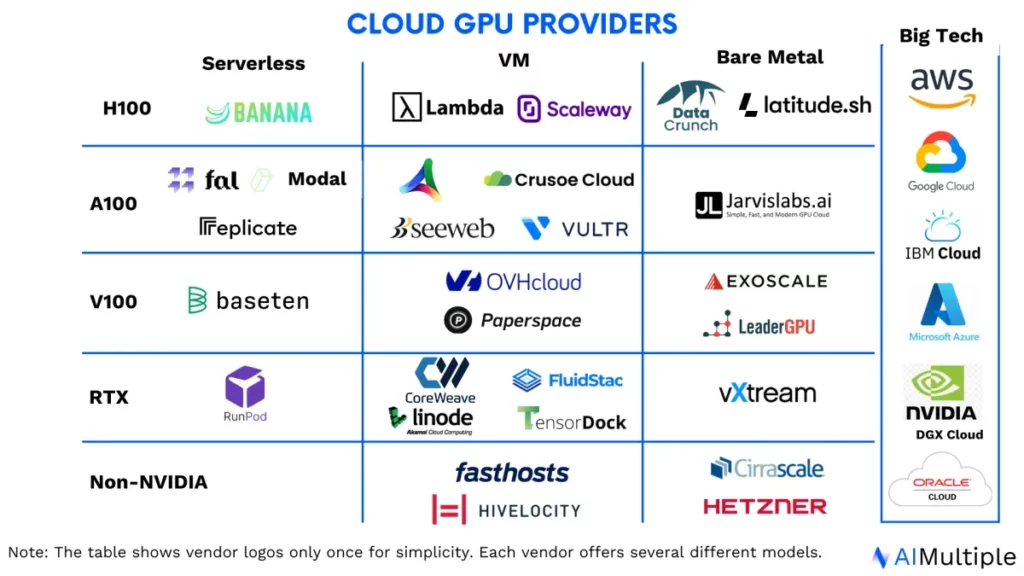

Modern AI workloads require immense computational throughput, especially for model training and inference. GPUs like the NVIDIA H100, A100, and RTX A6000 have become central to powering deep learning, reinforcement learning, and generative applications. Yet traditional hyperscalers such as AWS, Azure, and GCP often impose significant barriers: complex pricing, long provisioning times, and costly data egress fees.

Startups need a model that balances raw power with flexibility. They need the ability to scale compute in seconds, pay only for what they use, and fine-tune large models without infrastructure lock-in. This is precisely where Dataoorts’ on-demand compute ecosystem stands out.

Dataoorts – A Purpose-Built On-Demand GPU Cloud for AI Innovation

What Sets Dataoorts Apart from Other GPU Cloud Providers

Dataoorts is built specifically for high-intensity workloads such as large language models, computer vision, scientific simulations, and generative AI pipelines. It eliminates the friction between GPU resource availability and developer agility by allowing AI teams to deploy GPU instances within seconds.

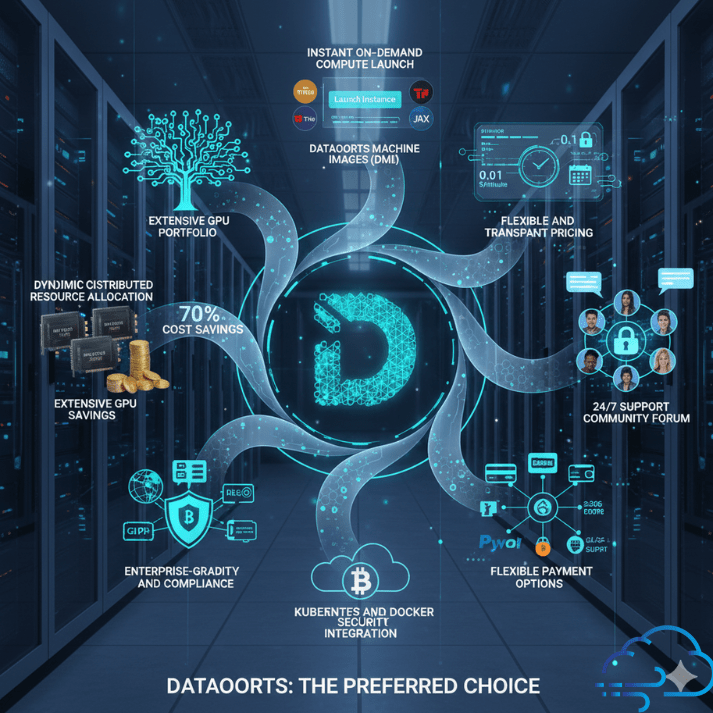

Here are the core features that make Dataoorts the preferred choice for startups and enterprises alike:

- Dynamic Distributed Resource Allocation (DDRA) Technology

This proprietary system intelligently reallocates idle GPU resources into active spot-like pools, ensuring the highest utilisation efficiency. As a result, users can achieve up to 70% cost savings compared to traditional GPU clouds.

- Instant On-Demand Compute Launch

Through Dataoorts Machine Images (DMIs), users can spin up fully configured GPU environments almost instantly. Frameworks like PyTorch, TensorFlow, and JAX come pre-installed, allowing AI engineers to begin model training without setup delays.

- Extensive GPU Portfolio

Dataoorts provides a robust selection of GPUs, including NVIDIA H100 80GB (PCIe and SXM5), A100 80GB (PCIe and SXM), RTX A6000, and A4000, giving users flexibility across performance tiers and budgets.

- Flexible and Transparent Pricing

Billing operates at the minute level with no hidden costs for data egress or idle storage. Reserved plans offer up to 45% discounts for long-term projects, providing both agility and predictability for budgeting.

- Kubernetes and Docker Integration

The platform natively supports multi-cloud orchestration, enabling containerised workloads, auto-scaling clusters, and streamlined DevOps operations. AI startups can integrate seamlessly with existing CI/CD pipelines.

- Enterprise-Grade Security and Compliance

Dataoorts uses advanced encryption, multi-factor authentication, and access control frameworks that comply with major regional data standards.

- Flexible Payment Options

From credit cards and PayPal to cryptocurrency and UPI (in India and Nepal), Dataoorts enables global accessibility and frictionless transactions.

- 24/7 Multilingual Support

With proactive technical support and a developer community forum, Dataoorts ensures consistent assistance for all users, regardless of geography or workload scale.

Why On-Demand Compute Matters for AI Startups

For AI startups, agility is non-negotiable. The ability to instantly allocate or release GPU resources can make or break experimentation cycles. Dataoorts’ on-demand compute enables startups to train, fine-tune, and deploy models in real-time without committing to long-term contracts or resource reservations.

Whether building an early-stage NLP model or optimising an image recognition pipeline, this level of control ensures that compute resources scale exactly with business needs. Combined with minute-level billing, Dataoorts provides a pay-as-you-go model that minimises waste while maximising productivity.
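To make the effect of billing granularity concrete, the sketch below compares minute-level and hourly rounding for a single job. It is an illustration under stated assumptions: the 95-minute job length is a made-up example, the 2.28 USD/hour rate is the H100 PCIe figure from the pricing table later in this post, and `billed_cost` is a hypothetical helper, not part of any Dataoorts API.

```python
import math


def billed_cost(runtime_minutes, hourly_rate_usd, granularity_minutes):
    """Cost of a job when usage is rounded up to the billing granularity."""
    billed_units = math.ceil(runtime_minutes / granularity_minutes)
    billed_minutes = billed_units * granularity_minutes
    return billed_minutes / 60 * hourly_rate_usd


H100_RATE = 2.28   # USD/hour, taken from the pricing table in this post
JOB_MINUTES = 95   # hypothetical job length

minute_level = billed_cost(JOB_MINUTES, H100_RATE, granularity_minutes=1)
hourly = billed_cost(JOB_MINUTES, H100_RATE, granularity_minutes=60)

print(f"minute-level: {minute_level:.2f} USD, hourly: {hourly:.2f} USD")
```

Under these assumptions the hourly-rounded bill comes to about 4.56 USD against about 3.61 USD with minute-level rounding. The gap grows with the number of short jobs a team runs.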

Real-World Impact

To illustrate the tangible benefits, consider the following cases:

- A healthcare AI company achieved sub-second diagnostic inference times by deploying distributed inference clusters on RTX A6000 GPUs.

- A research lab cut its model training expenses by 40% by switching from AWS to Dataoorts reserved plans for A100 clusters.

- A fintech analytics startup utilised Kubernetes-based auto-scaling on Dataoorts to manage burst workloads during quarterly reporting, ensuring high uptime and zero manual reconfiguration.

These examples highlight how Dataoorts converts computational infrastructure into a genuine competitive advantage.

Competitive Pricing and Feature Comparison Across GPU Cloud Providers

Pricing remains one of the defining factors when selecting a GPU cloud provider. Many platforms advertise low entry rates but layer additional costs through data egress, storage, or quota restrictions. To provide clarity, the following table compares Dataoorts with several prominent GPU cloud providers operating in the United States.

Comparative Pricing Table: Dataoorts vs Other Leading GPU Clouds

| GPU Model | Instance Type | Dataoorts (USD/hour) | Lambda Labs (USD/hour) | RunPod (USD/hour) | Vast.ai (USD/hour) | AWS (USD/hour) | Best Use Case |
|---|---|---|---|---|---|---|---|
| NVIDIA H100 80GB PCIe | VM | 2.28 | 2.45 | 2.30 | 2.10 | 4.50 | Large language model training, HPC simulations |
| NVIDIA A100 80GB SXM | Bare Metal | 1.88 | 2.00 | 1.95 | 1.80 | 3.90 | Deep learning training and inference |
| NVIDIA RTX A6000 | VM | 0.60 | 0.68 | 0.55 | 0.50 | 1.40 | Generative AI, video synthesis |
| NVIDIA RTX A4000 | VM | 0.18 | 0.25 | 0.20 | 0.15 | 0.70 | Entry-level model prototyping |
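The table can also be read programmatically. The following sketch copies the listed hourly prices into a dictionary and derives two figures: how much cheaper the Dataoorts rate is than the AWS rate for each GPU, and which provider has the lowest listed price. The names `PRICES` and `percent_cheaper` are illustrative, and the numbers are only as current as the table itself.

```python
# Hourly prices in USD, copied from the comparison table in this post.
PRICES = {
    "NVIDIA H100 80GB PCIe": {"Dataoorts": 2.28, "Lambda Labs": 2.45, "RunPod": 2.30, "Vast.ai": 2.10, "AWS": 4.50},
    "NVIDIA A100 80GB SXM": {"Dataoorts": 1.88, "Lambda Labs": 2.00, "RunPod": 1.95, "Vast.ai": 1.80, "AWS": 3.90},
    "NVIDIA RTX A6000": {"Dataoorts": 0.60, "Lambda Labs": 0.68, "RunPod": 0.55, "Vast.ai": 0.50, "AWS": 1.40},
    "NVIDIA RTX A4000": {"Dataoorts": 0.18, "Lambda Labs": 0.25, "RunPod": 0.20, "Vast.ai": 0.15, "AWS": 0.70},
}


def percent_cheaper(price, reference):
    """How much cheaper `price` is than `reference`, in percent."""
    return (1 - price / reference) * 100


# Dataoorts listed rate relative to the AWS listed rate, per GPU model.
savings_vs_aws = {
    gpu: percent_cheaper(row["Dataoorts"], row["AWS"]) for gpu, row in PRICES.items()
}

# Provider with the lowest listed hourly rate, per GPU model.
cheapest = {gpu: min(row, key=row.get) for gpu, row in PRICES.items()}

for gpu in PRICES:
    print(f"{gpu}: {savings_vs_aws[gpu]:.1f}% below AWS, lowest listed: {cheapest[gpu]}")
```

On listed hourly rates alone, this shows the Dataoorts price sitting roughly 49% to 74% below AWS, with Vast.ai holding the lowest listed rate in every row. Listed rates do not capture reliability, egress, or support, which the feature comparison covers.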

Feature Comparison Overview

| Feature | Dataoorts | Lambda Labs | RunPod | Vast.ai | AWS |
|---|---|---|---|---|---|
| Setup Time | Under 60 seconds | 2–3 minutes | Under 1 minute | Varies by host | 10–30 minutes |
| Billing Type | Minute-level | Hourly | Per second | Hourly | Hourly |
| Reserved Plan Discounts | Up to 45% | 25% | 20% | 15% | Variable |
| Pre-configured ML Environments | Yes | Yes | Yes | Limited | Yes |
| Egress Fees | Minimal | Standard | Low | Variable | High |
| Enterprise Support | 24/7 multilingual | Email only | Paid tier | Limited | Full |
| Security Standards | Enterprise-grade encryption, MFA | Basic | Basic | Host-dependent | Enterprise certified |

Analysis of Competitive Positioning

Dataoorts emerges as the best overall choice when balancing cost, setup speed, scalability, and enterprise readiness. While Vast.ai can offer slightly cheaper spot instances, its reliability varies due to its peer-to-peer hosting model. Lambda Labs and RunPod both deliver efficient deep learning setups but often suffer from inventory shortages or variable pricing for newer GPU models.

Hyperscalers like AWS still dominate in compliance and ecosystem integration but charge significantly higher rates and require lengthy configuration for GPU quotas. For startups that prioritize speed, transparency, and affordability, Dataoorts stands out as the optimal platform for both prototyping and production-scale deployment.

Deep Learning Optimization and Scalable Infrastructure with Dataoorts

Artificial intelligence workloads vary widely in computational intensity, but they all share one core requirement: consistent and efficient GPU performance. Whether an AI startup is fine-tuning a language model or running high-resolution vision tasks, scalability and performance optimisation define how fast innovation can happen.

Accelerating Deep Learning Workflows

Dataoorts streamlines the deep learning lifecycle by providing pre-configured environments that eliminate the time-consuming setup traditionally associated with GPU provisioning. The availability of Dataoorts Machine Images (DMIs) enables teams to launch instances with ready-to-use frameworks such as PyTorch, TensorFlow, and JAX. Each instance is optimised with CUDA, cuDNN, and NCCL libraries, ensuring that GPUs operate at maximum efficiency for both single-node and distributed training.

By reducing configuration delays, AI engineers can shift focus from infrastructure to experimentation. Training large transformer models or generative diffusion architectures no longer requires navigating multiple layers of dependencies or virtual environment conflicts. Everything needed to run an experiment is already optimised and integrated.
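On any freshly launched instance, a quick sanity check can confirm that the expected frameworks are importable before a long training job starts. The sketch below is generic Python and does not rely on anything specific to DMIs. It assumes only that the frameworks, if present, are installed under their usual package names (`torch`, `tensorflow`, `jax`).

```python
import importlib.util

FRAMEWORKS = ("torch", "tensorflow", "jax")


def framework_report(names=FRAMEWORKS):
    """Map each framework name to True if it is importable in this environment."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def cuda_summary():
    """Describe the CUDA devices PyTorch can see, if PyTorch is installed."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed"
    import torch  # imported lazily so the script also runs without PyTorch

    if not torch.cuda.is_available():
        return "PyTorch is installed but no CUDA device is visible"
    return f"{torch.cuda.device_count()} CUDA device(s); first is {torch.cuda.get_device_name(0)}"


report = framework_report()
print(report)
```

Calling `cuda_summary()` afterwards gives a one-line description of the visible GPUs, which is a cheap way to catch a driver or image mismatch early.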

Scalability for Growing AI Teams

Startups evolve rapidly, and so should their computational backbone. Dataoorts supports Kubernetes-based auto-scaling and multi-GPU clustering across virtual and bare-metal instances. This architecture allows seamless scaling during model training bursts and automatic downscaling once experiments conclude.

A major advantage of this setup lies in its elastic GPU resource allocation, which prevents both underutilisation and overcommitment. AI startups can begin with small clusters for pilot testing and then scale up to hundreds of GPUs for production model deployment without changing their core configurations.

For example, a generative design startup that initially used six A100 GPUs for training its diffusion-based image model was able to expand to sixty GPUs within minutes using Kubernetes orchestration. The workload scaled proportionally while maintaining a stable per-GPU cost and near-linear performance increase.

Integration with Development and MLOps Tools

Dataoorts supports seamless integration with popular MLOps frameworks such as Weights & Biases, ClearML, MLflow, and Kubeflow. This compatibility allows developers to monitor experiments, track parameters, and automate retraining cycles without needing separate infrastructure management.

The multi-cloud orchestration capabilities make it easier for startups to mix and match cloud environments. Developers can deploy workloads across multiple regions, replicate clusters for testing, and establish redundant configurations for mission-critical workloads. This flexibility allows teams to operate in hybrid environments that combine Dataoorts compute nodes with existing cloud services.

Optimizing GPU Utilization through DDRA Technology

One of Dataoorts’ defining technical achievements is its Dynamic Distributed Resource Allocation (DDRA) system. This technology analyses global GPU usage in real time and reallocates idle resources into active compute pools. The result is a dynamic spot-like marketplace that delivers consistent performance with significantly lower costs.

For high-demand workloads like parameter-efficient fine-tuning (PEFT) or large-scale inference serving, DDRA ensures that GPUs are neither idle nor throttled. Users only pay for what they consume while benefiting from the same performance expected from dedicated instances.

DDRA also helps maintain cost efficiency when running multiple parallel jobs. Instead of static allocation, the system automatically balances loads across clusters to maintain steady throughput, minimising latency during multi-node training.

Improving Cost Efficiency for Deep Learning

Hyperscalers often impose extra charges for egress, idle time, or prolonged allocation, which can inflate total project costs. Dataoorts’ billing model directly addresses these pain points through minute-level pricing and no surprise add-ons.

A recent analysis of GPU workloads showed that teams using Dataoorts’ H100 and A100 clusters experienced up to 65 percent lower total cost of ownership compared to AWS and GCP equivalents. This is due not only to cheaper compute rates but also to reduced idle billing and the free or discounted data egress structure.

For AI startups operating on limited capital, this means more training hours and faster iteration cycles without exhausting funding reserves.

Enterprise Reliability, Security, and Global Availability

When startups transition from experimentation to production, reliability and security become non-negotiable. Dataoorts has built its GPU infrastructure with enterprise-grade dependability and compliance at its core, ensuring that even mission-critical AI workloads operate seamlessly.

Uptime and Reliability

Dataoorts maintains 99.99% uptime across its global data centres, placing it among the most reliable GPU cloud providers in the market. This level of reliability is achieved through redundant network routing, distributed failover systems, and continuous performance monitoring.

Unlike peer-to-peer GPU platforms, where performance can vary based on host quality, Dataoorts’ managed infrastructure ensures consistent speed and availability. Every GPU node undergoes pre-deployment benchmarking, guaranteeing stable performance metrics that remain constant across all instances.

For AI-driven enterprises and research institutions, predictable uptime translates to uninterrupted training cycles and smooth production-level inference. Teams no longer need to worry about node outages or unpredictable downtime disrupting model fine-tuning or deployment.
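An uptime percentage is easier to reason about when converted into a downtime budget. The short calculation below does that conversion for the 99.99% figure. It is plain arithmetic, with `allowed_downtime_minutes` as an illustrative helper name.

```python
def allowed_downtime_minutes(uptime_fraction, days):
    """Maximum downtime, in minutes, consistent with an uptime level over a period."""
    return (1 - uptime_fraction) * days * 24 * 60


UPTIME = 0.9999  # the 99.99% figure quoted in this section

per_year = allowed_downtime_minutes(UPTIME, days=365)
per_month = allowed_downtime_minutes(UPTIME, days=30)

print(f"per year: {per_year:.2f} min, per 30-day month: {per_month:.2f} min")
```

At 99.99%, the budget works out to roughly 52.6 minutes per year, or about 4.3 minutes in a 30-day month.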

Security and Compliance Framework

Dataoorts applies multi-layered security practices designed for AI-sensitive workloads. These include end-to-end data encryption, secure key management, and region-specific compliance mechanisms. User data is protected through AES-256 encryption, and GPU clusters operate within isolated virtual networks that prevent unauthorised cross-access between tenants.

To meet global enterprise standards, Dataoorts aligns its security posture with frameworks like ISO 27001, GDPR, and SOC 2. Additionally, its internal security operations team continuously audits system access and anomaly detection logs to preempt vulnerabilities.

Access Control and Role Management

The platform provides granular role-based access control, allowing administrators to define precise permissions for different users within a team. This is essential for organisations running multi-developer projects or collaborations with external partners.

For example, a startup building healthcare AI applications can allocate read-only access to data scientists, full access to machine learning engineers, and restricted data visibility for interns. This structure not only ensures security but also maintains compliance with privacy laws governing sensitive data.
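The role split in that example can be sketched as a simple permission table with deny-by-default checks. This is a generic illustration of role-based access control, not the Dataoorts permission model: the role names, the action strings, and the `is_allowed` helper are all hypothetical.

```python
# Hypothetical roles and actions mirroring the healthcare example in the text.
ROLE_PERMISSIONS = {
    "data_scientist": frozenset({"data:read"}),
    "ml_engineer": frozenset(
        {"data:read", "data:write", "instances:launch", "instances:terminate"}
    ),
    "intern": frozenset({"data:read_masked"}),
}


def is_allowed(role, action):
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, frozenset())


checks = [
    ("data_scientist", "data:read"),
    ("data_scientist", "data:write"),
    ("ml_engineer", "instances:launch"),
    ("intern", "data:read"),
    ("contractor", "data:read"),
]
for role, action in checks:
    print(f"{role:15s} {action:20s} -> {is_allowed(role, action)}")
```

Keeping the table explicit and the default restrictive means a newly added role has no access until someone grants it, which is usually the safer failure mode for sensitive data.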

Disaster Recovery and Failover Mechanisms

High availability is further reinforced by Dataoorts’ disaster recovery framework. Backup snapshots, redundant storage systems, and real-time replication across geographically separated data centres minimise the risk of data loss. In case of regional failure, workloads can be rerouted automatically to a secondary cluster with minimal latency impact.

This architecture is especially valuable for AI companies that operate under strict service-level agreements (SLAs). Whether running 24-hour inference endpoints or managing live data streams, the failover assurance ensures continuous operation.

Global Accessibility for Distributed Teams

Dataoorts’ infrastructure spans multiple regions across North America, Europe, and Asia-Pacific, ensuring low-latency performance regardless of user location. AI startups in Silicon Valley, New York, or Austin can experience the same quality of service as teams in Toronto or London.

This global reach allows distributed AI teams to collaborate efficiently, sharing datasets, training checkpoints, and inference outputs through interconnected environments. It also provides strategic redundancy for companies working with international clients who require data residency compliance within specific regions.

Customer Support and Developer Community

A defining aspect of Dataoorts’ enterprise ecosystem is its 24/7 multilingual support structure. The platform maintains dedicated technical specialists capable of resolving GPU performance, container orchestration, and billing issues in real time.

In addition, the Dataoorts Developer Community Forum provides peer support, optimisation tips, and direct access to cloud architects who share insights on best practices. This combination of automated and human support ensures that both startups and enterprises receive continuous guidance throughout their scaling journey.

Strategic Recommendations for AI Startups Choosing a GPU Cloud Provider

Selecting the right GPU cloud infrastructure is no longer just a cost decision. For modern AI startups, it defines how fast you can experiment, deploy, and scale your models. A single decision about GPU cloud computing infrastructure can affect everything from burn rate and product velocity to model accuracy and investor confidence.

1. Prioritize On-Demand Flexibility Over Fixed Contracts

AI development is unpredictable. Training cycles may require massive compute bursts followed by long idle periods for data cleaning or evaluation. Startups should prioritise platforms that allow on-demand provisioning and minute-level billing rather than long-term reservations that tie up capital.

Dataoorts’ on-demand GPU model provides precisely this flexibility. Founders can test new architectures on RTX A6000 clusters one week and scale to A100 or H100 clusters the next without renegotiating usage terms. This eliminates financial inefficiency and supports agile experimentation.

2. Optimize for Parameter-Efficient Fine-Tuning (PEFT)

Fine-tuning large language models has historically been expensive, often requiring extensive GPU memory and training time. However, Parameter-Efficient Fine-Tuning (PEFT) techniques have redefined this process by updating only a fraction of model parameters, often less than 1%.

Dataoorts supports PEFT workloads efficiently due to its high-memory GPU lineup and pre-configured deep learning environments. Startups can use lower-cost GPUs such as the RTX A6000 for targeted fine-tuning instead of expensive multi-GPU A100 clusters. The result is a dramatic reduction in cost per experiment while maintaining near-baseline accuracy.

This capability is especially advantageous for startups building domain-specific generative models such as legal summarisation or biomedical question-answering systems, where rapid iteration and domain fine-tuning are critical.
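The "less than 1%" figure can be checked with simple arithmetic for one common PEFT method. The sketch below uses LoRA, which is not named in this post and is chosen here only as a representative technique: it freezes a weight matrix of shape `d_out × d_in` and trains two low-rank factors with `rank * (d_out + d_in)` parameters in total. The 4096 × 4096 layer size and rank 8 are illustrative assumptions.

```python
def lora_trainable_fraction(d_out, d_in, rank):
    """Trainable LoRA parameters as a fraction of the frozen weight matrix."""
    full_params = d_out * d_in            # frozen weight matrix W
    lora_params = rank * (d_out + d_in)   # factors B (d_out x rank) and A (rank x d_in)
    return lora_params / full_params


# Illustrative layer size and rank, not tied to any particular model.
fraction = lora_trainable_fraction(d_out=4096, d_in=4096, rank=8)
print(f"trainable fraction: {fraction:.4%}")
```

For this layer the adapter adds about 0.39% of the original parameter count. Because gradients and optimiser state are kept only for the adapter weights, memory needs drop, which is why a single high-memory GPU can be enough for targeted fine-tuning.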

3. Consider Total Cost of Ownership, Not Just Hourly Rates

Many cloud providers advertise competitive per-hour rates but overlook hidden costs such as data egress, storage, and idle instance charges. These costs can double or triple total expenses during large-scale training cycles.

Dataoorts’ transparent minute-level billing and free or discounted egress policy substantially reduce this overhead. Startups can move model checkpoints, training datasets, and output files without worrying about additional per-gigabyte charges.

A startup comparing real-world costs between Dataoorts and AWS found that while AWS listed its A100 instance at 3.90 USD per hour, the final cost, including storage and data egress, averaged over 5.10 USD per hour. Dataoorts’ rate for the same GPU stayed at a consistent 1.88 USD per hour with negligible overhead.
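The figures in this comparison can be turned into two ratios: how much the hidden costs inflate the listed AWS rate, and how large the gap is once those costs are included. The sketch below uses only the three numbers quoted above and treats the Dataoorts overhead as zero, which is a simplification of "negligible".

```python
def overhead_ratio(listed_rate, effective_rate):
    """Extra cost on top of the listed rate, as a fraction of the listed rate."""
    return (effective_rate - listed_rate) / listed_rate


def savings(cheaper_rate, pricier_rate):
    """Fraction saved by paying cheaper_rate instead of pricier_rate."""
    return 1 - cheaper_rate / pricier_rate


AWS_LISTED = 3.90      # USD/hour, from the text
AWS_EFFECTIVE = 5.10   # USD/hour including storage and egress, from the text
DATAOORTS = 1.88       # USD/hour, overhead assumed to be zero for this sketch

aws_overhead = overhead_ratio(AWS_LISTED, AWS_EFFECTIVE)
savings_vs_listed = savings(DATAOORTS, AWS_LISTED)
savings_vs_effective = savings(DATAOORTS, AWS_EFFECTIVE)

print(f"AWS overhead on listed rate: {aws_overhead:.1%}")
print(f"savings vs listed rate:      {savings_vs_listed:.1%}")
print(f"savings vs effective rate:   {savings_vs_effective:.1%}")
```

With these numbers, hidden costs add about 31% to the listed AWS rate, and the saving grows from roughly 52% on listed rates to roughly 63% on effective rates.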

4. Build Hybrid Workflows with Kubernetes Orchestration

As AI teams mature, workloads often diversify into microservices, some for real-time inference, others for batch training. Kubernetes orchestration enables efficient management of these workloads, ensuring consistent performance across clusters.

Dataoorts’ multi-cloud integrated Kubernetes support helps startups build hybrid infrastructures combining public, private, and edge deployments. Teams can train on cloud GPUs and deploy lightweight inference on local servers without needing separate orchestration systems.

This setup is invaluable for productised AI services that demand continuous retraining or A/B testing of models. Scaling becomes frictionless while operational costs remain predictable.

5. Prioritize Reliability and Compliance for Sensitive Workloads

AI startups in healthcare, finance, or legal technology must handle data responsibly. Compliance frameworks such as HIPAA, GDPR, and SOC 2 demand secure infrastructure and precise access control.

Dataoorts offers enterprise-grade encryption, secure access policies, and multi-region data localisation to meet these standards. Startups handling confidential datasets can deploy workloads in isolated GPU environments, ensuring regulatory compliance from day one.

6. Embrace Multi-GPU and Multi-Node Scalability

Innovation speed in AI depends heavily on how fast teams can train large models. As models grow from billions to trillions of parameters, distributed GPU training becomes a necessity. Dataoorts provides multi-GPU clustering and high-speed networking between nodes to ensure near-linear scaling efficiency.

For instance, an NLP startup that transitioned from single-node to multi-node A100 clusters on Dataoorts saw a 4.8x speedup in training times with only a 5 percent increase in cost, demonstrating the platform’s ability to scale efficiently without sacrificing ROI.
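Two ratios help interpret a result like this one: work completed per dollar, and scaling efficiency relative to the added hardware. The sketch below computes both. The 4.8x speedup and 5% cost increase come from the example above, but the number of nodes is not stated there, so `ASSUMED_NODE_RATIO` is a hypothetical value used purely for illustration.

```python
def work_per_dollar_gain(speedup, cost_ratio):
    """How much more training work each dollar buys after scaling out."""
    return speedup / cost_ratio


def scaling_efficiency(speedup, resource_ratio):
    """Achieved speedup divided by the ideal (linear) speedup."""
    return speedup / resource_ratio


SPEEDUP = 4.8           # from the example in the text
COST_RATIO = 1.05       # a 5% cost increase, from the text
ASSUMED_NODE_RATIO = 5  # hypothetical: the text does not state the node count

gain = work_per_dollar_gain(SPEEDUP, COST_RATIO)
efficiency = scaling_efficiency(SPEEDUP, ASSUMED_NODE_RATIO)

print(f"work per dollar: {gain:.2f}x, scaling efficiency: {efficiency:.0%}")
```

Under the assumed five-fold increase in nodes, a 4.8x speedup corresponds to 96% scaling efficiency. Independent of that assumption, each dollar buys about 4.57 times as much training progress per unit of wall-clock time.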

7. Invest in Knowledge and Support Infrastructure

Even the best GPU infrastructure fails if teams cannot use it effectively. Founders should prioritise platforms that offer responsive technical assistance and a vibrant developer community.

Dataoorts’ 24/7 multilingual support and dedicated community forum help startups resolve configuration, optimisation, and performance issues quickly. This level of personalised assistance ensures that even small teams can operate with the technical confidence of enterprise-scale organisations.

8. Leverage Reserved Plans for Predictable Growth

For teams that have established a stable AI product with consistent computational demand, reserved plans offer an excellent way to lock in cost advantages. Dataoorts’ long-term reservation options provide up to 45% savings compared to pay-as-you-go rates.

By combining reserved plans for ongoing production needs and on-demand instances for experimentation, startups can balance flexibility and predictability effectively.
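A blended plan of this kind can be estimated with a few lines of arithmetic. The sketch below assumes the A100 rate of 1.88 USD/hour from the pricing table, the maximum 45% reserved discount (actual discounts may be lower), and a made-up monthly usage of 500 steady hours plus 100 burst hours. `blended_monthly_cost` is an illustrative helper, not a Dataoorts billing API.

```python
def blended_monthly_cost(on_demand_rate, reserved_hours, burst_hours, reserved_discount):
    """Monthly cost when steady usage is reserved and bursts run on demand."""
    reserved_rate = on_demand_rate * (1 - reserved_discount)
    return reserved_hours * reserved_rate + burst_hours * on_demand_rate


A100_RATE = 1.88     # USD/hour, from the pricing table in this post
MAX_DISCOUNT = 0.45  # upper bound quoted for reserved plans

# Hypothetical usage: 500 steady hours reserved, 100 burst hours on demand.
blended = blended_monthly_cost(A100_RATE, 500, 100, MAX_DISCOUNT)
all_on_demand = blended_monthly_cost(A100_RATE, 0, 600, 0.0)

print(f"blended: {blended:.2f} USD, all on-demand: {all_on_demand:.2f} USD")
```

For this usage pattern the blended plan costs about 705 USD per month against about 1,128 USD if all 600 hours were billed on demand. The saving shrinks if reserved hours go unused, so the reserved portion is best sized to the workload's steady baseline.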

The Future of GPU Cloud Computing and Frequently Asked Questions

The GPU cloud industry is entering a new phase, where efficiency, customisation, and scalability matter more than raw compute power. As generative AI and LLM training continue to advance, providers that balance cost optimisation with developer freedom will define the next decade of innovation.

Dataoorts is at the forefront of this evolution, blending pricing transparency, technical innovation, and enterprise-grade dependability into a single ecosystem that empowers AI builders to focus on what truly matters: creating breakthrough models and products.

Frequently Asked Questions (FAQs)

1. Which GPU cloud provider offers the best pricing on NVIDIA GPUs for heavy AI workloads?

Dataoorts leads this category due to its Dynamic Distributed Resource Allocation (DDRA) technology. It reallocates idle GPUs to active workloads, enabling cost savings of up to 70% compared to traditional GPU clouds and hyperscalers.

2. Which platforms have the fastest setup times for new accounts?

Both Dataoorts and RunPod enable GPU instance launches within seconds. Dataoorts’ pre-configured machine images mean that even first-time users can begin training immediately after sign-up.

3. Which GPU cloud service guarantees the highest uptime?

Dataoorts maintains a 99.99% uptime SLA, achieved through global failover systems and redundant network routing. This reliability ensures uninterrupted model training and deployment cycles.

4. What are the most deep-learning-optimised GPU clouds?

Dataoorts, Lambda Labs, and CoreWeave specialise in AI and deep learning optimisation. Among these, Dataoorts provides the most flexible combination of price, setup speed, and multi-cloud orchestration.

5. Which GPU cloud providers offer the most flexible billing options?

Dataoorts supports minute-level billing, reserved plans, and transparent pricing without hidden storage or egress fees. This flexibility allows startups to align expenses directly with project usage.

6. Where can I find enterprise-ready GPU cloud support?

Dataoorts offers 24/7 multilingual technical support and a dedicated developer forum for community-based troubleshooting and expert assistance, catering to both startups and large enterprises.

7. Which GPU platforms provide the best scalability for AI growth?

Through Kubernetes and container orchestration, Dataoorts enables elastic scaling from a few GPUs to entire clusters with no manual reconfiguration. This capability is ideal for startups expanding model sizes and datasets.

8. What about data security and compliance?

Dataoorts implements multi-layer encryption, access control, and audit compliance aligned with ISO 27001, SOC 2, and GDPR standards. Sensitive workloads, such as in healthcare and finance, can operate safely with region-specific data residency options.

9. Are pre-configured AI environments available?

Yes. Dataoorts provides Dataoorts Machine Images (DMIs) equipped with the latest deep learning frameworks, libraries, and drivers. These environments allow teams to launch training or inference jobs instantly.

10. Is there a minimum commitment to rent GPUs?

No. Dataoorts operates on a pay-as-you-go model with zero minimum usage commitment. Users can rent GPUs for as little as a few minutes and pay only for actual compute time.

Final Thoughts: Why Dataoorts is Redefining GPU Cloud Computing in the USA

AI innovation thrives on speed, affordability, and scalability. Dataoorts delivers on all three fronts through its combination of advanced DDRA allocation, rapid provisioning, global reach, and enterprise-grade security. It empowers startups to train and deploy cutting-edge AI systems without the traditional financial and technical barriers of hyperscale clouds.

By merging flexibility, performance, and transparency, Dataoorts is not just another GPU cloud provider; it is a complete AI infrastructure ecosystem built for the future. For decision-makers in AI startups, choosing Dataoorts means choosing faster experimentation, predictable costs, and a clear path to scaling innovation at global standards.